Source: Drew Graham

The “Filter Bubble”: friend or foe?

Tailoring search results and news to who you are and what you do as an individual can be counted among the biggest technological breakthroughs of the digital age. In one survey, 57% of respondents were OK with providing personal information if it was for their benefit, while 74% got frustrated when content and ads appeared to have nothing to do with their interests (Consumer Perceptions of Social Login Study, 2013).

Source: Original content

Consumers want to search for and find information with minimal effort. However, is our sense of civic responsibility lost when such algorithms lack transparency?

Source: Laura Aziz

Users don’t know what gets through these personalised filters. It’s essential that they have control over their own filter bubble, as algorithms aren’t programmed with ethics (J. Delaney, 2017). People should seek out factual news that challenges their beliefs and adds different perspectives to subjects, no matter how uncomfortable or conflicting it may be.

A functioning democracy only works with a healthy flow of objective information, yet social media deepens polarisation by segregating users into groups that only reinforce their own ideology. This can become disastrous when consumers receive fake news that manipulates their opinions.

A research project covering nine countries, including the United States, found that propaganda and misinformation are widespread online and are “supported by Facebook or Twitter’s algorithms”. The results confirm that bots reached positions of measurable influence during the 2016 US election (Woolley & Guilbeault, 2017).

Source of side banner: original content

I feel that automated bots create an illusion of online support and popularity that can make political candidates appear viable where they might not have been before. In addition to fake news emerging as a propaganda tool, bots can also influence political processes of global significance.

Networking sites need to implement algorithms that can identify and remove automated bots. Individuals need to be aware of the rising risk of computational propaganda. We need to break out of our filter bubbles, seek out factual information, and identify censorship and propaganda.

A video explaining how social media manipulation and disinformation have led to a decline in Internet freedom.

(Word Count: 304)

References:

Consumer Perceptions of Social Login Study, 2013. Online Consumers Fed Up with Irrelevant Content on Favorite Websites, According to Janrain Study (online). Retrieved from: http://www.janrain.com/about/newsroom/press-releases/online-consumers-fed-up-with-irrelevant-content-on-favorite-websites-according-to-janrain-study/

Woolley & Guilbeault, 2017. Computational Propaganda in the United States of America: Manufacturing Consensus (online). Retrieved from: http://comprop.oii.ox.ac.uk/wp-content/uploads/sites/89/2017/06/Comprop-USA.pdf

How to evaluate website content, 2017. The University of Edinburgh, Using the Internet for Research (online). Retrieved from: https://www.ed.ac.uk/information-services/library-museum-gallery/finding-resources/library-databases/databases-overview/evaluating-websites

J. Delaney, 2017. Filter bubbles are a serious problem with news, says Bill Gates (online). Retrieved from: https://qz.com/913114/bill-gates-says-filter-bubbles-are-a-serious-problem-with-news/

Hi Marianne!

I find your discussion of automated bots interesting! I agree that bots are ever-present on social media and can spread false information. Besides politically motivated bots, there are useful ones, such as company bots that answer customers’ queries or bots that help spread news of a potential disaster. (https://www.cnbc.com/2017/03/10/nearly-48-million-twitter-accounts-could-be-bots-says-study.html)

Social bots make it harder for individuals to tell whether an account is actually a bot or a human. The aim of such bots is to infiltrate social networks and gain users’ trust over time, which makes them more effective at influencing people. (https://www.observatoire-fic.com/social-bots-new-threats-by-daniel-guinier/)

Given that bots have both positive and negative uses, the question is: how do we distinguish a trustworthy bot from an inauthentic one, and how does that affect the way we evaluate information disseminated by bots?


Hi Nicholas! It’s always refreshing to hear of AI bots that aren’t trying to steal our jobs or destroy humanity! I have used some of these customer enquiry bots myself, and they are sometimes more delightful to deal with than their human counterparts. Also, those are interesting articles about the different kinds of AI bots on the net; I had no idea bot attacks could carry malware and Trojan horses.

Yes, you are right: social bots infiltrating networking sites to manipulate the public’s perception is despicable and unethical. The same type of bot can be found on Instagram, where it inflates a user’s likes and followers. The more likes and followers an influencer has, the more credible he or she appears to be.

I honestly feel that we can’t always tell a good bot from a malicious one at first glance. Bots are getting more sophisticated and significantly harder to spot, but we can start by being aware of these bots and spreading awareness about them.

There are differences between human accounts and bot accounts. Bots tend to retweet far more often than humans, and they also have longer usernames and younger accounts. By contrast, humans receive more replies, mentions, and retweets than bots. You can read more about it on this website!

https://www.technologyreview.com/s/529461/how-to-spot-a-social-bot-on-twitter/
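To make those signals concrete, here is a minimal Python sketch that counts how many of the warning signs above an account shows. The `Account` fields and every cut-off value are hypothetical, chosen only for illustration and not taken from the MIT Technology Review article or any study; a real detector would learn its thresholds from labelled data rather than hard-coding them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical summary of one Twitter account's activity."""
    username: str
    tweets: int              # total posts, including retweets
    retweets: int            # how many of those posts are retweets
    account_age_days: int
    replies_received: int
    mentions_received: int
    retweets_received: int


# Hypothetical cut-offs for illustration only, not from any study.
RETWEET_SHARE_LIMIT = 0.8        # more than 80% of posts are retweets
USERNAME_LENGTH_LIMIT = 12       # characters
YOUNG_ACCOUNT_DAYS = 90
LOW_ENGAGEMENT_PER_TWEET = 0.05  # replies + mentions + retweets received per post


def bot_red_flags(acct: Account) -> list[str]:
    """Return the heuristic warning signs this account shows."""
    flags = []
    retweet_share = acct.retweets / acct.tweets if acct.tweets else 0.0
    if retweet_share > RETWEET_SHARE_LIMIT:
        flags.append("mostly retweets")
    if len(acct.username) > USERNAME_LENGTH_LIMIT:
        flags.append("long username")
    if acct.account_age_days < YOUNG_ACCOUNT_DAYS:
        flags.append("young account")
    received = acct.replies_received + acct.mentions_received + acct.retweets_received
    # Humans tend to get more interaction back; bots mostly broadcast.
    if received / max(acct.tweets, 1) < LOW_ENGAGEMENT_PER_TWEET:
        flags.append("few replies, mentions or retweets")
    return flags


# Two made-up accounts to show the heuristic in use.
suspect = Account("news_update_bot_48213", tweets=2000, retweets=1900,
                  account_age_days=30, replies_received=3,
                  mentions_received=5, retweets_received=2)
person = Account("marianne", tweets=500, retweets=100,
                 account_age_days=1500, replies_received=200,
                 mentions_received=150, retweets_received=100)

print(bot_red_flags(suspect))  # all four warning signs
print(bot_red_flags(person))   # no warning signs
```

Counting red flags rather than giving a yes/no verdict fits the point made above: no single signal proves an account is a bot, and sophisticated bots may show none of them.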


Hi Marianne,

I agree with your comment that the more followers you have, the more credible you seem. This is why people, especially new influencers, buy followers on Instagram (or other social media sites) to boost their credibility. The challenge for us users is to scrutinise an account and decide whether it is credible.

With bots adopting human-like interactions and sleep cycles, it is more difficult to spot a malicious bot. Thanks for the article on how to spot a social bot! It offers really interesting insights beyond the superficial ways of evaluating accounts!

Just one question, though: would interacting with a social bot affect your digital credibility?


Hey Marianne,

I enjoyed reading your post! You have offered an interesting view on automated bots. I did not know bots could be used to manipulate support for political candidates.

This led me to consider Mdm Halimah, who became Singapore’s 8th President this year. Some may think that her path to the presidency was undemocratic because the authorities decided her rivals did not meet strict eligibility criteria, while others choose to believe that she was elected on her merits, in keeping with the late Mr Lee’s emphasis on a meritocratic nation. (https://www.theguardian.com/world/2017/sep/13/singapore-first-female-president-elected-without-vote)

Singapore ranks 151 out of 180 in the 2017 World Press Freedom Index, which suggests that we have very low media freedom. Do you think the government might in some sense have manipulated the media to boost Mdm Halimah’s popularity before she was officially recognised as President? How, then, should people approach news about the walkover election? Do you think it is right for people to trend #NotMyPresident? (http://www.theindependent.sg/notmypresident-trends-in-singapore-after-walkover-announced-halimah-yacob-set-to-become-president/)


Hi Stella! That’s an interesting point about our political system. Mdm Halimah becoming Singapore’s 8th President this year can be a rather thorny subject for a lot of Singaporeans.

Singapore Press Holdings monopolised media coverage of our 8th presidential election, both online and in print. It is a corporation created by the merger of two newspaper groups. While it is not government-owned, it is closely supervised by our political leadership (Freedom from the press, 2016). Source: http://www.freedomfromthepress.info/resources.html

Perhaps bots played a part in shaping the public’s perception of the candidates. One example of potential foul play with bots is the 2016 Brexit referendum. A study tracked 156,252 Russian accounts that mentioned “#Brexit” and found that Russian accounts posted almost 45,000 messages about the EU referendum in the 48 hours around the vote. There is an obvious political motive to sway public perception, as political parties stand to gain from fake news. I believe that everyone should have freedom of speech, as long as they are legitimate citizens of the country and not bots.

You can read more over here! https://techcrunch.com/2017/11/15/study-russian-twitter-bots-sent-45k-brexit-tweets-close-to-vote/


Hey Marianne,

Thank you for sharing the links! It is startling that Singapore Press Holdings (SPH) dominates Singapore’s news, and even more so to see that The Straits Times, which I read often, is also closely supervised by the political leadership. In future, when I read online news, I will probably look at sources outside those owned by SPH.

I share your view that everyone should have freedom of speech, which is why it is saddening to see how countries can manipulate their online media to sway public opinion. I believe this means we need to be more mindful of the bots we may encounter so that we can evaluate online information from a broader perspective.


Hey Marianne!

I like how you discussed the way bots contribute to the spread of fake news and its political implications! Nowadays, it is difficult to figure out whether a social media account is real or fake because bots behave like real users.

You were right that networking platforms need to implement algorithms to tackle this issue, and Facebook is doing so! It trains machine learning algorithms on words commonly used in the headlines of fake stories, so the system can detect future stories with similar words and keep them from appearing in feeds.
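As a rough illustration of “learning from words in fake headlines”, here is a minimal Python sketch of a naive Bayes word classifier. This is not Facebook’s actual system, whose details are not described here; naive Bayes is swapped in as a simple, well-known stand-in, and the four training headlines are invented for the example.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def words(headline: str) -> list[str]:
    """Lower-case a headline and split it into words."""
    return re.findall(r"[a-z']+", headline.lower())


class HeadlineClassifier:
    """Tiny multinomial naive Bayes over headline words (illustrative only)."""

    def __init__(self) -> None:
        self.counts = {"fake": Counter(), "real": Counter()}
        self.docs = Counter()

    def train(self, headline: str, label: str) -> None:
        self.counts[label].update(words(headline))
        self.docs[label] += 1

    def fake_log_odds(self, headline: str) -> float:
        """Positive means the words look more like past fake headlines."""
        vocab_size = len(set(self.counts["fake"]) | set(self.counts["real"]))
        # Prior: how often each label appeared in training.
        score = math.log(self.docs["fake"] / self.docs["real"])
        for w in words(headline):
            for label, sign in (("fake", 1.0), ("real", -1.0)):
                total = sum(self.counts[label].values())
                # Add-one smoothing so an unseen word doesn't zero out a label.
                p = (self.counts[label][w] + 1) / (total + vocab_size)
                score += sign * math.log(p)
        return score


# Invented training headlines, for illustration only.
clf = HeadlineClassifier()
clf.train("You won't believe this shocking miracle cure", "fake")
clf.train("Shocking secret doctors don't want you to know", "fake")
clf.train("Parliament passes budget after long debate", "real")
clf.train("Central bank holds interest rates steady", "real")

print(clf.fake_log_odds("Shocking miracle cure doctors hate") > 0)  # True
print(clf.fake_log_odds("Parliament debates interest rates") > 0)   # False
```

The sketch also shows the limit raised below: it only recognises wording it has seen before, so a fake story written in sober, news-like language would slip through.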

Facebook also uses measures such as third-party fact-checkers like Snopes to flag doubtful articles, and it has redesigned features so users can report fake news easily (check it out on my post!).

However, there is a limit to what these platforms can do. In terms of digital literacy, do you think individuals like us have an important role to play in this issue too?

I would love to hear your thoughts on this 😊

Cheers,

Pay Jian Wen

Source: https://techcrunch.com/2017/10/05/facebook-article-information-button/


Hey Jian Wen! You are absolutely right, bots are getting more sophisticated and significantly harder to spot! Did you know that bots can also be programmed with malware and Trojan horses to attack users and corporations?

When bots steal credit card information and personal data, criminals can sell the information on the black market, create cloned credit cards and even blackmail the user or company! I’m clutching my pearls as I speak (or my credit card, for that matter). It is reassuring to know that Facebook has third-party fact-checkers like Snopes, and that user contributions help flag fake articles.

There are differences between human accounts and bot accounts. Bots tend to retweet far more often than humans, and they also have longer usernames and “younger” accounts. By contrast, humans receive more replies, mentions, and retweets.

However, there are limitations, as more advanced bots are now harder to detect (bots hacking into real accounts, humans lending bots their accounts, etc.). We can’t tell a good bot from a malicious one 100% of the time, but we can start by being aware of these bots and spreading awareness about them. Fact-checking the source and analysing a post critically and without bias might help prevent a biased outcome.

Source: https://www.technologyreview.com/s/529461/how-to-spot-a-social-bot-on-twitter/


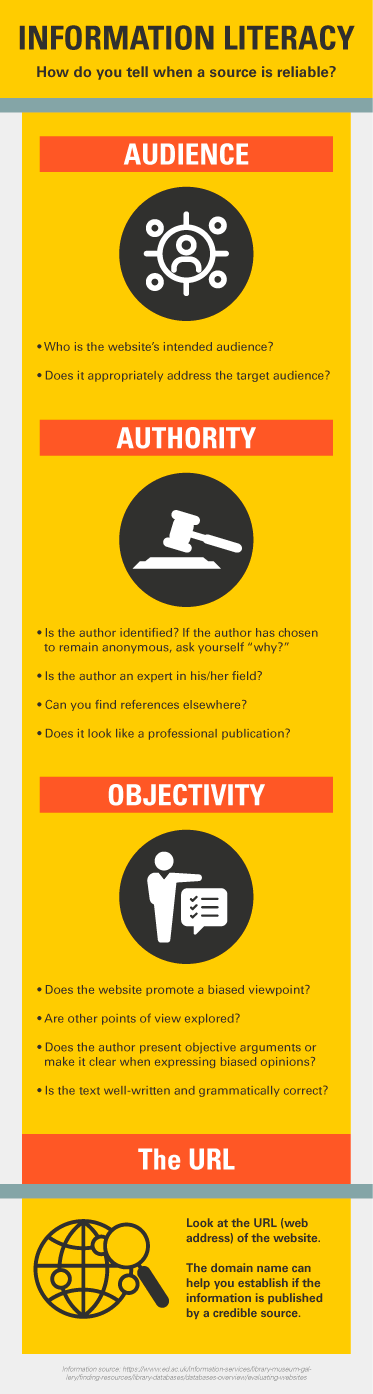

Hi Marianne, I love the infographic you’ve created on information literacy. I agree with several points you made in the post, particularly the one on filter bubbles and their impact. I believe that the filter bubble works hand in hand with several cognitive biases, especially confirmation bias (Center For Media Literacy, 2017).

However, a study by Brown University’s Jesse Shapiro and Stanford University’s Matthew Gentzkow suggests that the largest growth in the polarization of opinions, especially political ones, comes from the demographic least likely to use social media (Shapiro, Gentzkow, Boxell, 2017). With that in mind, could the impact of filter bubbles on the Internet be exaggerated?

Also, would increasing individuals’ control over filter algorithms widen the digital gap between initiated and uninitiated Internet users?

I’m looking forward to your replies and again, amazing job on the post and infographics!

Kirby Koh Gengshan

Sources:

Confirmation Bias and Media Literacy.pdf

w23258.pdf

https://news.vice.com/story/social-media-filter-bubbles-arent-actually-a-thing-research-suggests


Hi Kirby, thank you for such a lovely comment! Yes, you do have a point about the filter bubble working hand in hand with several cognitive biases; confirmation bias is endemic, as it is human nature and a subconscious reaction.

That’s interesting data you found showing that the largest growth in political polarization comes from the demographic least likely to use social media. With that said, you might be right. This could affect the filter bubble for these groups of people, as their ideologies are exaggerated and reaffirmed by like-minded individuals. Furthermore, the limited time they spend on the Internet and their lack of digital literacy could prevent them from seeing the bigger picture.

People gravitate toward information that supports their worldview; whether the information is real or fake often isn’t even on their radar. They are convinced that their worldviews are informed and complete, and that they are responsible and balanced in their decision-making.

Giving individuals more control over their filter algorithms will encourage initiated Internet users to gain perspectives outside their comfort zone and, hopefully, encourage uninitiated users to see the benefits of breaking free from a filter bubble. However, “some are calling this the Age of Algorithms and predicting that the future of algorithms is tied to machine learning and deep learning that will get better and better at an ever-faster pace” (Rainie & Anderson, 2017). Perhaps our digital differences will merge into one huge pool of information, and the digital literacy we know now may look different in the future.

Source: http://www.pewinternet.org/2017/02/08/code-dependent-pros-and-cons-of-the-algorithm-age/


Hi Marianne, I second your thoughts on increasing individual control and responsibility over filter algorithms. Without individual responsibility in content filters and digital literacy, external censorship can often take over and cloud the growth of public knowledge.

However, I would like to hear your views on minors and their role in individual filter algorithms. Thank you so much for taking the time to reply so far; I hope to hear from you again.

Cheers,

Kirby Koh Gengshan


Hello Kirby, as for minors who are still finding their “voice” and their way around the Internet, it is crucial for parents not only to be digitally literate in this field but also to educate their children about the existence of filter algorithms. If we can nurture the habit of critical thinking among minors, perhaps we can cultivate a generation of individuals rather than herds of people with a “sheep mentality”.


Hey Marianne!

Thank you for your insights about how bots can be programmed with malware and Trojan horses to carry out malicious activities such as stealing personal information! This reminds me and other readers that evaluating information online is one thing, and the information we put out on the Internet is another! It’s encouraging to see the economy improving thanks to technological advances, but the way people exploit these advances for personal gain at the expense of others is alarming!

I hope that Internet users will evaluate online news critically so that they are not misled into providing personal data, which can lead to unwanted consequences.

Cheers!


No problem, Jian Wen! Always glad to inform my friends about the malware that lurks around the Internet. We are not 100% safe, but the least we can do is be more aware of such cases so that we don’t put our personal information at risk of being compromised! I feel that more could be done on social networking sites. Even with fact-checkers in place, implementing strict rules would hurt their profits, as Facebook stands to gain from fake news through sponsored posts. Advertising news, real or fake, increases these social media sites’ revenue.

Hopefully more laws will be implemented in the future; we can only wait and see!


[…] 2. Marianne’s blog […]


[…] Marianne’s post highlights that automated bots can shape our perspective, and it is important that we are aware of them. It is good to see Facebook implementing algorithms to identify and remove automated bots, so as to reduce the spread of fake news (Wagner, 2017). On our part, we should also identify these bots in order to burst our filter bubble. In this image, I summarised how we can identify these bots. […]


[…] Marianne’s post provided me with a new perspective on social media credibility. Automated social bots are everywhere on social media, and studies have shown that 48 million Twitter accounts could be bots (Newberg, 2017)! Surprised? These bots infiltrate social networks and gain users’ trust over time to influence them effectively (Reynaud, 2017). Watch the video below on what social bots do. […]




[…] was intrigued by Marianne’s post, as it talked about how bots can contribute to and affect the popularity of political candidates. It […]
